| Full name: | InterpreterGlove |
| --- | --- |
| Start date: | 2012. 09. 01. |
| End date: | 2014. 08. 31. |
| Participants: | MTA SZTAKI, Euronet Magyarország Informatikai Zrt. |

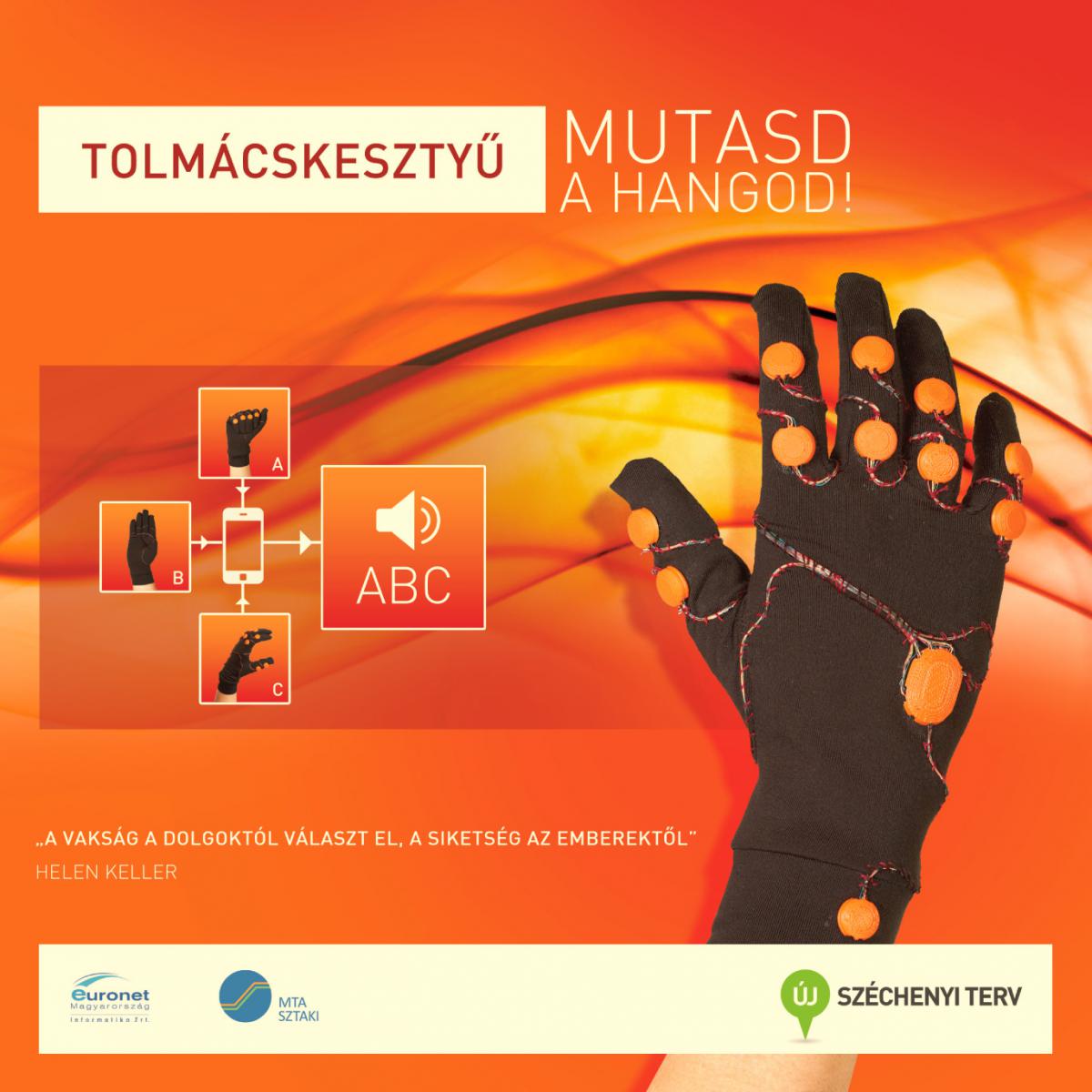

We are creating an assistive tool that improves the lives of hearing- and speech-impaired people by enabling them to communicate easily with non-disabled people using hand gestures. The project achieves this with an integrated hardware-software ecosystem consisting of wearable motion-capture gloves and a software solution for hand gesture recognition and natural language processing. The integrated tool acts as a simultaneous interpreter, letting users communicate in their native language, sign language. The InterpreterGlove reads the signed text aloud, so that sign language users and hearing people with no sign language knowledge can communicate fluently with each other.

This device will greatly expand the communication opportunities of deaf and speech-impaired people. Currently, they either have to go through cumbersome explanations to express their intent or rely on a human sign language interpreter. The InterpreterGlove advances their social integration by enabling them to express themselves to people who do not know sign language. Users of the device can handle situations that their disability formerly prevented them from handling, such as shopping, personal banking and taking part in education. It may also play a significant role in improving the employment prospects of speech- and hearing-impaired people.

The glove prototype is made of breathable elastic material, and the electronic parts and wiring are ergonomically designed for comfortable daily use. The hardware consists of twelve integrated nine-axis motion-tracking sensors and a main board that incorporates a wireless communication module. Each nine-axis sensor combines a three-axis accelerometer, a three-axis gyroscope and a three-axis magnetometer. None of these three components can determine spatial orientation stably on its own, but together they form a reliable triple for identifying absolute 3D orientation. Signal fusion algorithms calculate accurate pitch, roll and yaw values for each sensor fixed on the glove. To determine the deflection of the joints, relative angles are calculated for pairs of sensors. The glove firmware uses these relative 3D angles to compose a digital copy of the hand, which is encoded as a special gesture descriptor. Connected to the user's cell phone, the glove transmits the gesture descriptor stream to a mobile application, which performs all the complex high-level computational tasks.
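The relative-angle step can be illustrated with a minimal sketch. It assumes that sensor fusion yields one unit quaternion `(w, x, y, z)` per sensor; the function names and the quaternion representation are illustrative assumptions, not the actual glove firmware.

```python
import math

def quat_conjugate(q):
    """Conjugate of a unit quaternion, which is also its inverse."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_multiply(a, b):
    """Hamilton product a * b of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def quat_from_axis_angle(axis, angle_deg):
    """Unit quaternion for a rotation of angle_deg around the given axis."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    half = math.radians(angle_deg) / 2.0
    s = math.sin(half) / norm
    return (math.cos(half), ax * s, ay * s, az * s)

def relative_angle_deg(q_parent, q_child):
    """Angle of the rotation that takes the parent sensor frame to the child frame."""
    q_rel = quat_multiply(quat_conjugate(q_parent), q_child)
    w = min(1.0, abs(q_rel[0]))  # clamp against rounding error, take the shorter arc
    return math.degrees(2.0 * math.acos(w))

# Hypothetical readings: palm sensor tilted 30 degrees, finger sensor 75 degrees
# around the same axis, so the joint between them is bent by about 45 degrees.
palm = quat_from_axis_angle((1, 0, 0), 30)
finger = quat_from_axis_angle((1, 0, 0), 75)
joint_bend = relative_angle_deg(palm, finger)
```

Working with the relative rotation rather than the absolute orientations means the computed joint deflection does not change when the whole hand is turned.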

We specified a custom gesture descriptor format. Its semantics reflect general human biomechanical characteristics and are also aligned with the specific requirements of the glove output. A Hagdil (HAnd Gesture DescrIption Language) descriptor stores all substantial anatomical characteristics of the human hand: the parallel and perpendicular positions of the fingers relative to the palm, the wrist position and the absolute position of the hand. We use it to encode the user's gestures, which are transmitted to the mobile device so that the application has sufficient information about the current state of the hand.
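To make the idea of such a descriptor more concrete, the following sketch shows one possible in-memory representation. It is a hypothetical stand-in only: the class name, field names, the use of small integer levels and the distance function are all assumptions made for illustration and do not reproduce the actual Hagdil format.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class HandDescriptor:
    """Hypothetical stand-in for a hand gesture descriptor (not the real Hagdil layout)."""
    # Bend of each finger perpendicular to the palm, thumb to little finger (assumed levels).
    finger_flexion: Tuple[int, int, int, int, int]
    # Sideways position of each finger parallel to the palm (assumed levels).
    finger_spread: Tuple[int, int, int, int, int]
    # Wrist position as two assumed components.
    wrist: Tuple[int, int]
    # Absolute hand orientation as quantized pitch, roll and yaw (assumed levels).
    orientation: Tuple[int, int, int]

    def as_vector(self):
        """Flatten all components into one tuple for comparison."""
        return self.finger_flexion + self.finger_spread + self.wrist + self.orientation

def descriptor_distance(a, b):
    """Sum of absolute component differences; 0 means the descriptors are identical."""
    return sum(abs(x - y) for x, y in zip(a.as_vector(), b.as_vector()))

# Two hypothetical hand states that differ in one finger and one wrist component.
first = HandDescriptor((0, 3, 3, 3, 3), (1, 0, 0, 0, 0), (1, 1), (2, 0, 1))
second = HandDescriptor((0, 3, 2, 3, 3), (1, 0, 0, 0, 0), (1, 0), (2, 0, 1))
difference = descriptor_distance(first, second)
```

A compact, comparable representation of this kind is what allows a receiving application to decide whether two consecutive hand states belong to the same sign.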

The mobile application processes the Hagdil gesture descriptor stream from the motion-capture glove, produces understandable text and reads it out as audible speech. The application logic of our automatic sign language interpreter software consists of two main algorithms: sign descriptor stream segmentation and text auto-correction. Both algorithms are adapted to the unique characteristics of the glove hardware, the Hagdil semantics and the typical properties of the generated descriptors.

Using the one-handed glove, the mobile software recognizes dactyl (fingerspelling) signs and custom gestures and maps them to textual expressions.

We examined diverse approaches to letter segmentation. To find the transitions between signed letters and to retrieve the best-quality Hagdil descriptor candidate, we experimented with simple Hagdil similarity-based, repetition-based and sliding-window algorithms, and also tried segmenting along unknown descriptors, along known transitions and based on the kinetics of the fingers and hand. Segmentation produces raw input text that contains spelling errors caused by the user's inaccuracy and by the underlying hardware. The auto-correction algorithm processes this defective raw text and transforms it into understandable words and sentences that can be read aloud by the speech synthesizer. Because the third-party spell checkers we evaluated could not cope with this task, we created a custom auto-correction algorithm based on Levenshtein distance calculation, supplemented with n-gram and confusion matrix searches and operations.
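The two stages can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not our production algorithm: the input is assumed to be one already recognized symbol per frame (`None` for an unknown descriptor), the function names are hypothetical, the confusion costs are invented example values, and the n-gram component is left out.

```python
def segment_by_repetition(frames, min_repeats=3):
    """Accept a symbol once it has been seen min_repeats times in a row.

    frames: per-frame recognition results, None for an unknown descriptor.
    """
    letters = []
    current, run = None, 0
    for symbol in frames:
        if symbol == current:
            run += 1
        else:
            current, run = symbol, 1
        # Emit exactly once per run, at the moment the run becomes long enough.
        if symbol is not None and run == min_repeats:
            letters.append(symbol)
    return "".join(letters)

def weighted_levenshtein(source, target, confusion_cost):
    """Edit distance in which substituting commonly confused letters is cheaper."""
    prev = [float(j) for j in range(len(target) + 1)]
    for i, s in enumerate(source, start=1):
        curr = [float(i)]
        for j, t in enumerate(target, start=1):
            sub = 0.0 if s == t else confusion_cost.get((s, t), 1.0)
            curr.append(min(prev[j] + 1.0,       # delete s
                            curr[j - 1] + 1.0,   # insert t
                            prev[j - 1] + sub))  # substitute s with t
        prev = curr
    return prev[-1]

def correct_word(raw, dictionary, confusion_cost):
    """Return the dictionary word with the smallest weighted distance to raw."""
    return min(dictionary, key=lambda word: weighted_levenshtein(raw, word, confusion_cost))

# Invented example: the signs for "m" and "n" are assumed to be easily confused.
confusion = {("m", "n"): 0.3, ("n", "m"): 0.3}
frames = ["m", "m", "m", None, "a", "a", "a", "x", "m", "m", "m", "m", "e", "e", "e"]
raw_text = segment_by_repetition(frames)
corrected = correct_word(raw_text, ["game", "name", "lame"], confusion)
```

In this example the single stray frame `"x"` is discarded by the segmentation, and the confusion-weighted distance prefers "name" over the other candidates, which a plain Levenshtein distance would rank equally.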

Our work involves not only the interaction of hardware and software components but also assisting people through a complex hardware-software solution, which makes it challenging in many ways. We involved members of the targeted deaf community as pilot testers as early as possible. We learned a lot from their experiences and reactions, which deeply influenced our work and achievements. Because we aim to support people with special needs, the software is highly customizable. To help this humanitarian assistive tool spread easily, we will publish it for all major mobile operating systems: Android, iOS and Windows Phone 8.

Although the tool was originally aimed at deaf people, we realized that it has high potential for many other groups, such as people with speech impairments or physical disabilities and people in rehabilitation after a stroke. We plan to address their special needs in future projects.

Exhibitions

- InnoTrends 2013

- Kutatók Éjszakája (Researchers' Night), 2014.09.27.

- Telenor Okostelefon Akadémia 7.0 (Telenor Smartphone Academy 7.0), 2014.09.18.

- Rehabilitációs Expo és Állásbörze (Rehabilitation Expo and Job Fair), 2014.11.12.

Press and media

- Figyelő (print and online), 2013/27

- Index - Tech (online), 2013.10.20.

- Duna TV - Novum (TV), 2014.05.10.

- Népszabadság - Tudomány-Technika section (print and online), 2014.08.14.

- Szeretlek Magyarország (online), 2014.08.15.

- HVG (online), 2014.08.16.

- RTLKlub - Híradó (TV), 2014.09.11.

- Hegyvidék TV - Híradó (TV), 2014.09.12.

- Klasszik Rádió - Digitália (radio), 2014.09.13.

- Kossuth Rádió - Közelről, Trend-idők (radio), 2014.09.19.

- TV2 Aktív (TV), 2014.09.19.

- Telenor press release (online), 2014.09.19.

- Mosaic online (online), 2014.09.19.

- Hirado.hu (online), 2014.09.19.

- Mediapiac.com (online), 2014.09.19.

- Kütyümagazin (online), 2014.09.19.

- Telenor Okostelefon Akadémia 7.0 (video), 2014.09.22.

- Computerworld (online), 2014.09.23.

- Kossuth Rádió - Szonda (radio), 2014.09.28.

- Lánchíd Rádió - Szaldó (radio), 2014.09.30.

- D1TV - Melanzs (TV), 2014.09.30.

- Prím (online), 2014.10.05.

- MTV M1 - Esély (TV), 2014.10.10.

- hír24 (TV), 2014.10.25.

The InterpreterGlove project is carried out by a consortium of MTA SZTAKI and Euronet Magyarország Informatikai Zrt.